Tutor Kai

Tutor Kai is a generative AI programming tutor that I developed as part of my PhD research to give students a way to use GenAI for programming help without simply receiving the solution. It has been deployed in two university courses with over 200 students across multiple semesters since 2023. The system supports Python, Java, and C++ and is integrated into the GOALS Learning Management System.

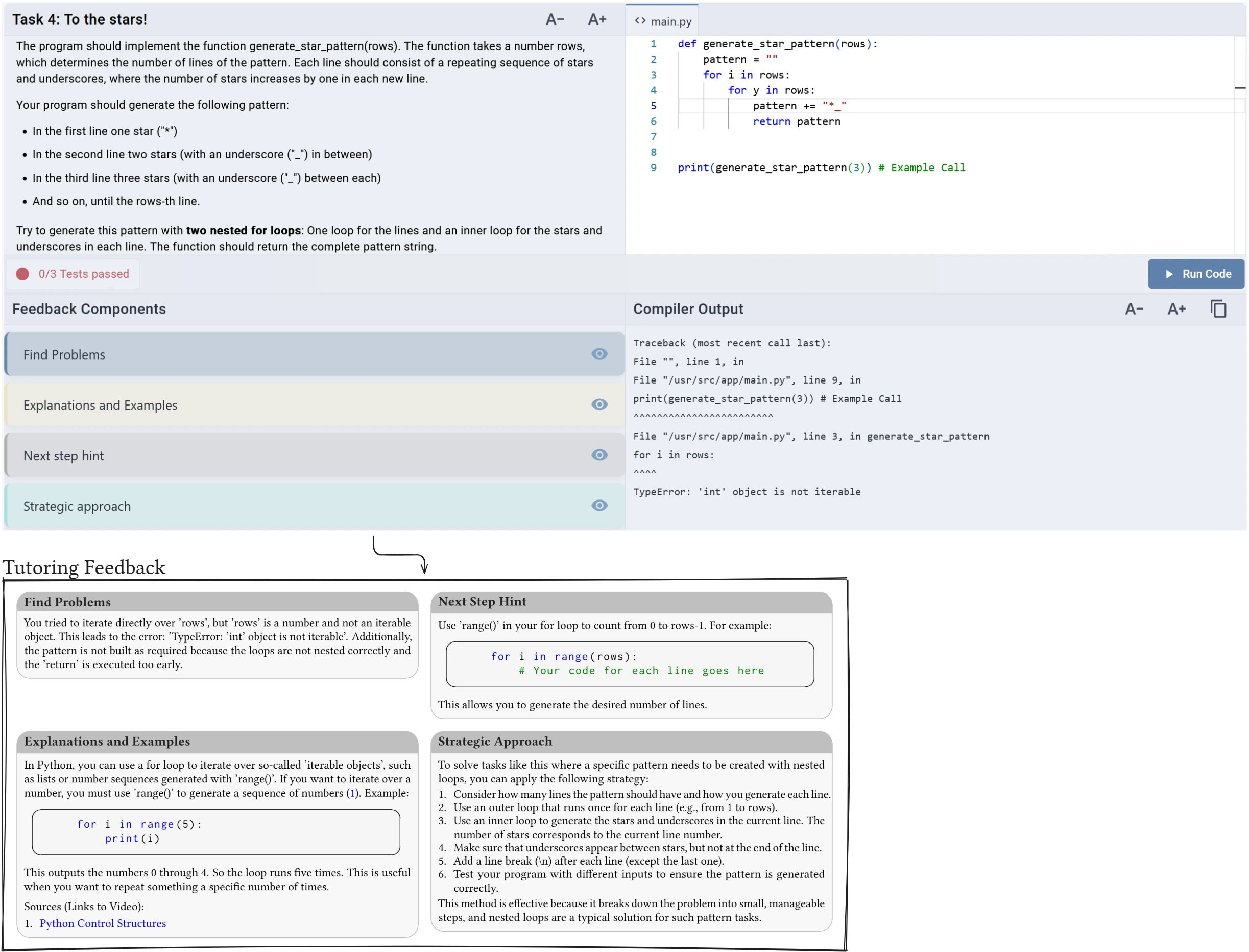

Tutoring Feedback

Since 2025, Tutor Kai has generated four distinct types of formative, elaborated feedback 1 (based on Keuning et al., 2019 and Narciss, 2006):

- Find Problems (KM): Describes students' mistakes. If the mistake relates to a compiler error, a brief explanation of the compiler message is provided. Relevant information from the task description helps clarify the mistake.

- Explanations and Examples (KC): Explains concepts relevant to the student's mistakes identified in KM. Provides generic code examples for illustration and cites relevant lecture materials (video recordings) when available. This offers additional scaffolding, as students see an exemplary implementation they must adapt to their own problem.

- Next Step Hint (KH): Provides an actionable next step to guide students toward correcting their mistakes. May include a short code snippet, but never the complete solution.

- Strategic Approach (SPS): Provides a general, step-by-step description of procedures for solving similar tasks. Does not provide the correct solution, but strategic processing steps (Kiesler, 2022) to prevent trial-and-error behavior.

Technical Implementation

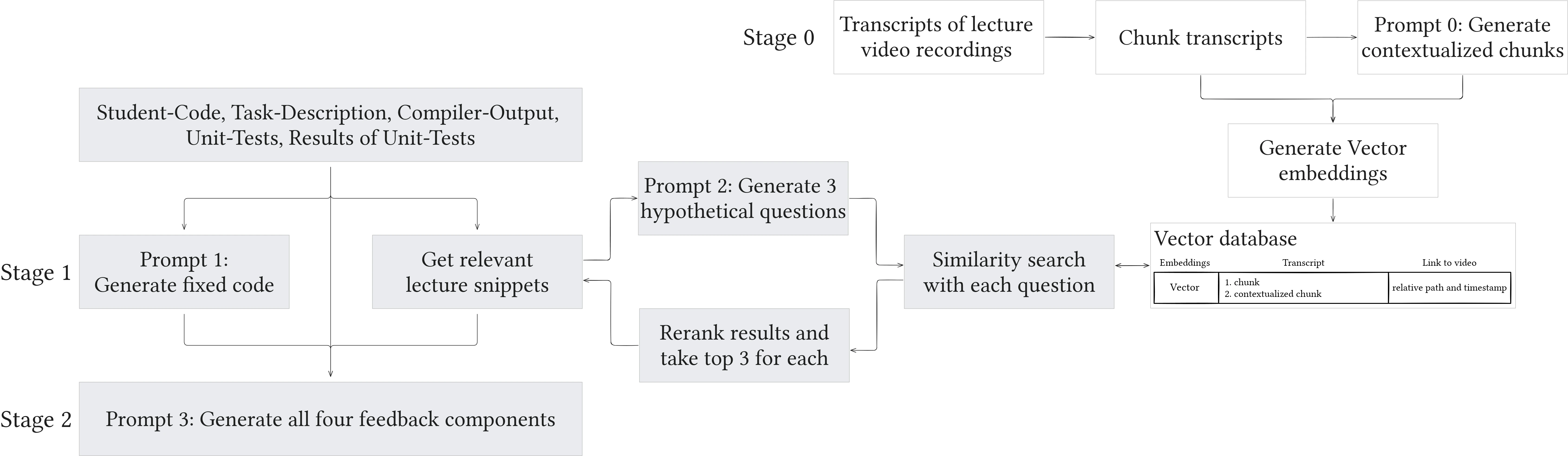

To address the pedagogical need for more concept explanations, I integrated a Retrieval Augmented Generation (RAG) pipeline that searches lecture video transcripts for relevant content. When generating the "Explanations & Examples" component, the system includes lecture video segments as inline citations with timestamps. Students can also ask follow-up questions about any feedback component, opening a context-aware chat interface.
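The retrieval step can be illustrated with a minimal sketch. It is not the actual Tutor Kai implementation: the `Segment` structure, the bag-of-words cosine similarity (a stand-in for whatever embedding search the real pipeline uses), and the citation format are all assumptions made for illustration.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Segment:
    """A transcript chunk from one lecture video (hypothetical structure)."""
    video: str
    start_seconds: int
    text: str


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, segments: list[Segment], k: int = 2) -> list[Segment]:
    """Return up to k segments most similar to the query, skipping zero scores."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(s.text)), s) for s in segments]
    scored = [(score, s) for score, s in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def format_citation(segment: Segment) -> str:
    """Render an inline citation with a mm:ss timestamp (assumed format)."""
    minutes, seconds = divmod(segment.start_seconds, 60)
    return f"[{segment.video}, {minutes:02d}:{seconds:02d}]"


def build_prompt_context(query: str, segments: list[Segment]) -> str:
    """Join the retrieved segments, each prefixed with its citation."""
    return "\n".join(
        f"{format_citation(s)} {s.text}" for s in retrieve(query, segments)
    )
```

In this sketch, the string returned by `build_prompt_context` would be appended to the feedback prompt so that the model can quote the citation markers verbatim; a production system would more likely use dense embeddings and a vector index.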

History and Iterations

2023

I began developing Tutor Kai in 2023, shortly after completing my master's degree, driven by the goal of giving students access to ChatGPT's capabilities without the risk of simply requesting complete solutions. The initial demo was offered alongside JACK to students in the Object-Oriented and Functional Programming course during summer semester 2023 2 3, funded by university quality improvement initiatives. The system started with Python tasks only, but high student interest prompted rapid expansion during the semester to include Java support as well.

During evaluation of the feedback types, I noticed that the system frequently generated "Knowledge about Mistakes" and "Knowledge about How to Proceed," but less frequently "Knowledge about Concepts." Since concept explanations are pedagogically important, I extended Tutor Kai with a Retrieval Augmented Generation (RAG) pipeline 4. This system searches lecture video transcripts and integrates relevant segments into the prompt, allowing Tutor Kai to provide inline citations linking to specific moments in lecture recordings.

2024

In subsequent semesters, I integrated Tutor Kai into the GOALS Learning Management System I was developing and added C++ support for the Algorithms and Data Structures course.

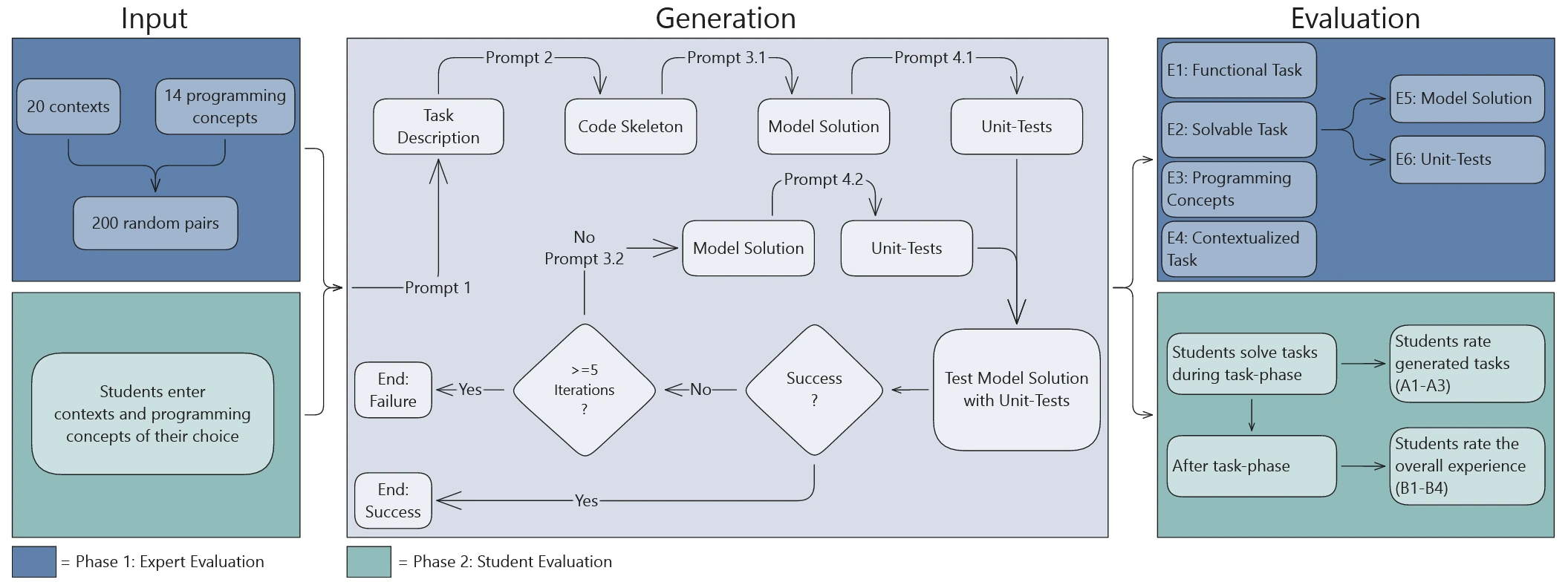

In the summer semester, we implemented the ability for students to generate their own tasks with self-selected concepts and contexts 5. We also evaluated the feedback system through think-aloud and eye-tracking studies 6. The findings show that more experienced students benefit more from the GenAI feedback than less experienced students, because less experienced students were sometimes unable to comprehend the given information. This occurred when the GenAI feedback referred to fundamental conceptual knowledge (e.g., 'loop') unfamiliar to the students without sufficient explanation or illustrative examples, or when students grasped the semantic solution but were unfamiliar with the necessary Python syntax, which the GenAI feedback failed to provide 6.

For the winter semester (Algorithms and Data Structures), I experimented with letting students choose feedback helpfulness levels, ranging from minimally helpful (Socratic questions) to very helpful (all feedback types with extended explanations and code examples). The aim was to mitigate the problems observed in the summer semester (see paragraph above) and provide better support for less experienced students. Students overwhelmingly selected the very helpful option despite its length but indicated a desire for more granular control over specific feedback types rather than overall helpfulness levels.

2025

Therefore, I developed a fully automatic adaptive system with a feedback router that used learning analytics data to determine appropriate feedback types. However, this proved inconsistent and raised EU AI Act compliance concerns, because it created student profiles through black-box GenAI. A second approach let students choose feedback types before generation, but this caused coherence issues when types were generated separately (e.g., Knowledge about How to Proceed did not match the mistakes identified by Knowledge about Mistakes).

This led to the adaptive tutoring feedback architecture for the summer semester 2025: all feedback types are generated automatically and simultaneously, and students decide which components to collect. I conducted a think-aloud and eye-tracking study with 20 students to examine how they engage with different feedback types 1, and collected log data throughout the semester (currently under review). Based on these findings, I added the ability to ask follow-up questions about any individual feedback component for the winter semester 2025/26.
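The "generate everything at once, let students collect what they want" idea can be sketched as follows. This is a hypothetical illustration, not the production code: the class names, the dictionary-shaped model response, and the `collected` log are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class FeedbackType(Enum):
    KM = "Find Problems"
    KC = "Explanations and Examples"
    KH = "Next Step Hint"
    SPS = "Strategic Approach"


@dataclass
class FeedbackBundle:
    """All four components from one generation pass, hidden until collected."""
    components: dict[FeedbackType, str]
    collected: list[FeedbackType] = field(default_factory=list)

    def collect(self, kind: FeedbackType) -> str:
        """Reveal one component and record the student's choice."""
        if kind not in self.collected:
            self.collected.append(kind)
        return self.components[kind]


def bundle_from_response(response: dict[str, str]) -> FeedbackBundle:
    """Check that a single model response contains every feedback type."""
    missing = [t.name for t in FeedbackType if t.name not in response]
    if missing:
        raise ValueError(f"response is missing components: {missing}")
    return FeedbackBundle({t: response[t.name] for t in FeedbackType})
```

Because all components come from one response, the hint in `KH` can refer to the mistake described in `KM`, which avoids the coherence problem of generating each type separately. The `collected` list stands in for the kind of log data that shows which components students actually open.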

I also integrated the tutoring feedback component for UML modeling tasks and interactive tasks for graph and tree algorithms (see GOALS). The evaluation of this integration is ongoing.

Intro Video for Students (Winter Semester 2025/26)

GenAI Voice Mode

The voice mode provides real-time spoken interaction with Tutor Kai using OpenAI's Realtime API with multimodal GenAI. It presents feedback in another modality (verbal). Students can talk to the AI tutor naturally about their programming problems, supporting use cases like debugging, pair programming, and brainstorming. Voice interfaces offer potential accessibility benefits for learners with visual impairments or neurodiverse needs, and enable a more natural tutoring conversation compared to typing while keeping eyes and attention on code. The system provides context-aware responses with access to the student's code, compiler output, task description, and dialogue history. The system was evaluated with 9th-grade students over multiple weeks in an authentic classroom, where it supported extended dialogues (averaging 24 messages per interaction).

The primary technical limitation is code verbalization. Despite being prompted to "describe code colloquially", the AI struggles with programming symbols and syntax, sometimes attempting to pronounce them literally rather than describing their meaning naturally. This leads to incorrect feedback and can confuse students. Because of the low feedback correctness (71.4%) and high cost, the GenAI Voice Mode needs further development and evaluation before deployment to all students 7.

Generating Tasks

Students can generate unlimited personalized Python programming tasks by entering any programming concepts they want to practice and any context they find interesting. The system produces complete task packages: problem description, code skeleton, unit tests, and model solution.

Expert evaluation of 200 generated tasks 5 shows strong results: 89.5% produce functional code, 92.5% are solvable with sufficient information, and 100% successfully incorporate student-chosen contexts. Student evaluation confirmed high satisfaction, with a 79.5% task completion rate. Currently, we do not offer this option directly to students, because a 10% error rate is still too high and could lead to frustration. Instead, we extended the system to also generate Java and C++ tasks and use it in an educator-in-the-loop approach to help tutors create new tasks.